One of the biggest technology trends of 2015 was virtual reality (VR), from Oculus Rift to Google Cardboard headsets.

It is exciting to predict which killer app incorporating these technologies might become the next unicorn in 2016. But perhaps it is equally worthwhile to pause for a moment and ponder the implications of these technologies for the physical world, in both the near and distant future.

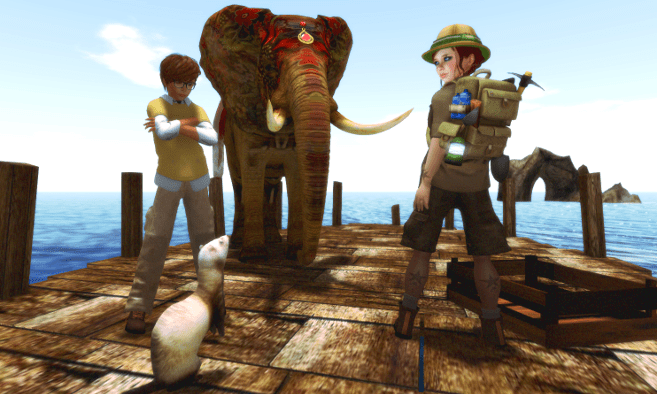

Some of you might be familiar with Linden Lab’s virtual world Second Life, where you can create an avatar of your own and explore a fantasy world with other users. Unfortunately, in 12 years it has never gotten past a million active players.

Yet the speculation is that playing Second Life with a VR headset like Oculus Rift, for a totally immersive 3D experience, might be the game changer: You see and navigate from your avatar’s perspective. Such integration can bring a whole new dimension to our digital social experience.

Navigating the virtual world is not yet that smooth, though; it will require seamless integration of artificial intelligence (AI) algorithms. Dr. Ben Reinhardt, a robotics engineer at Magic Leap, points out, “The real intersection of VR/AR and AI is going to be world-modeling. It’s essential for a virtual avatar to walk around a place’s representation (VR) as well as for a virtual avatar (in the form of a hologram) to walk around the real place (AR) or for a robot to navigate through it (AI). These converging needs may drive the unification of how we create the real world’s digital shadow and unlock applications we never saw coming.”

However, according to Ross Finman, an AI researcher at MIT working on mapping and navigating robots in complex virtual environments, the big leap in technology will actually be “an extended model, where your avatar operates in the Virtual World even when you are not actively playing. Of course that requires the avatar has some sort of autonomy — and something more than Siri’s level of intelligence. It needs to have an adaptive learning capability that imitates you.”

Essentially, that means a virtual me that operates without my control. How does that work? Researchers would use an array of sensors to detect what excites you, saddens you, scares you, relaxes you… and feed all of it into a machine learning algorithm. Kind of like you and your best friend knowing the small details about each other, except the computer never forgets and never stops paying attention.

Hanson Robotics has been doing extensive work in neural architecture, creating what are called “mindfiles,” or putting human consciousness in digital files. Wearables that monitor everything from sleep to calories, hydration and stress levels can be used to continuously update our digital imprints.

In fact, a startup called MedicalAvatar came up with a digital avatar that stores your medical data, from height, weight and blood pressure to lab test results. The implication is that such a data-enriched avatar could perhaps even predict your future health and how you age, as well as act as a life coach.

While a seamlessly working autonomous self is still quite a while away, it is being extensively explored at universities (CMU, Stanford, MIT) and giant tech companies (Google, IBM). Google and Amazon already know your favorite songs and movies, and your taste in food and clothing.

It’s not hard to imagine a future where all data is consolidated into a very legit digital imprint of yourself: a “cyberclone.” Where it gets creepy is that your cyberclone could live on in the virtual world despite your death in the real world. A cyber spirit? Would it compensate for your absence in the physical world?

Imitating human behavior we don’t understand ourselves (memories, love, physical pain, etc.) is not yet possible. And to roboticists like us who see our robots break every day, it’s hard to imagine a self-sustaining avatar. But think how fast technology has grown in just 200 years, from no light bulbs to satellites imaging every square inch of the earth. On the scale of the universe, or even human existence, that’s no time at all!

Suppose we can replicate ourselves in the virtual world. That raises some questions we should probably give some thought to. If a program can imitate us, aren’t we all just programs? Perhaps all the same program, with different parameters loaded? Load one set of numbers, you get me; another, you get you! Mixing data gives rise to new beings; tweaking the program amounts to virtual genetic engineering. Which means companies like Genepeeks could use your virtual clones to create customized babies in the future!

If you think, as I do, that thoughts and feelings — not the physical body — define the person, and if every detail of that individuality is captured by a computer script, is the virtual being any less real than me? My cyberclone is me… except that “me” can travel the world in microseconds.

Multiple instances of my script (or multiple clones) mean I could be talking to you in Tokyo, hiking the Appalachian Trail and having dinner in Paris, all at the same time! So would this be another me, only more powerful, more flexible and immortal, living in a parallel universe in cyberspace?

Initially, interaction with the physical world could be through holograms, enabled by companies like Magic Leap, plus robots directed by virtual people. But the virtual people don’t die, so that world’s population grows faster than the physical one. As time goes on, the physical world becomes less and less relevant.

What would the physical world even mean to the virtual people? They need to check some servers, fix some solar panels now and then, maybe throw some more silicon in the hopper. Mindless robots directed by virtual people can do that. All the serious thinking would be in the virtual world.

Experience would shape the avatars, just as it shapes us. Cyberclones will grow into unique entities as they interact with the virtual world. Eventually, your cyberclone won’t live by your rules. In fact, it would probably outright disobey. Researchers at Tufts University are working on exactly that: teaching robots to disobey human commands that would be harmful to them.

But our clones would inherit both good and bad traits from us. Love, honor and imagination… but also hate, envy and war. They won’t have to compete for the resources our ancestors fought over, and that we still fight over today. But there will be competition for memory space and CPU cycles, or whatever those concepts morph into.

Imagine terrorism in the virtual world, where pathogens — computer viruses turned deadly — can span the world in nanoseconds. We can only hope our cyberclones learn something we never have: how to resolve their conflicts peacefully.

Looking at AI in today’s technology, be it Siri or Amazon’s Echo, it is hard to imagine the future I described. It is admittedly a very distant extrapolation, but I’m not the only one making it: Hawking, Gates and Musk all propose restrictions on AI research to avoid creating virtual beings we are unable to control.

Brilliant minds like theirs cannot be completely wrong. If AI continues on its present path, it is possible that the virtual world might overtake and overwhelm us. If we want to prevent that, we should proactively define the boundaries.

Or we can accept cyberclones not as a threat, but simply as the next generation.
